2 September | 2 min read

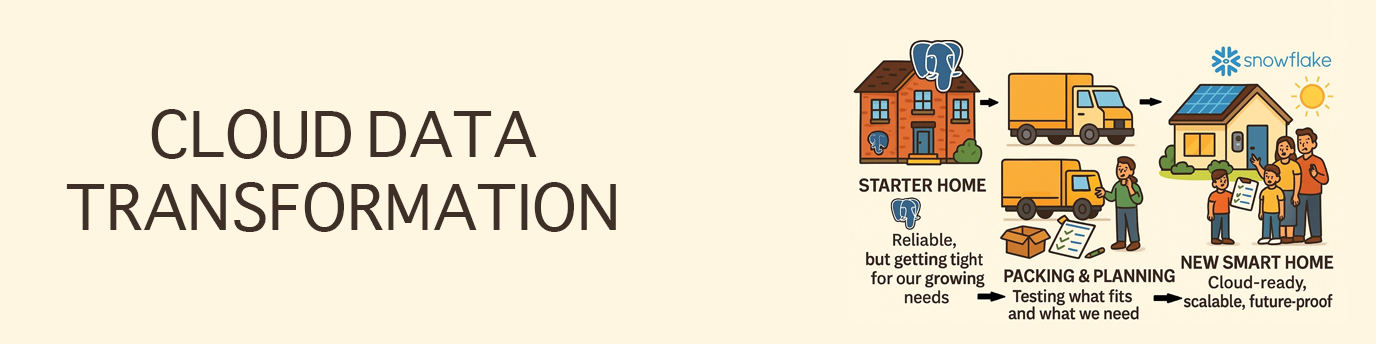

Not every data journey begins with a grand strategy. Sometimes it starts quietly—with a bit of curiosity, a few limitations surfacing, and a sense that the current tools just aren’t cutting it anymore.

A few months ago, one of our clients found themselves at that point. Their on-prem systems were starting to feel heavy. With data volumes growing and new use cases pushing against the limits of what they had, it was clear that change wasn’t just a nice-to-have anymore – it was necessary. That’s when Snowflake came into the picture.

To test the waters, we kicked off a small proof of concept. No big-bang migration, no fanfare. Just a focused attempt to move a lightweight workload from PostgreSQL to Snowflake. We wanted to keep it manageable so we could really understand how the pieces would fit together.

We used Apache Airflow on AWS to orchestrate the flow, pulling data out of PostgreSQL and writing it as flat files to Amazon S3. That S3 layer acted as our handoff point. From there, Snowflake pipelines and tasks took over: ingesting, transforming, and cleaning the data inside the platform. It was simple by design, but incredibly useful. We weren’t just testing compatibility; we were proving that this architecture could handle real-world workflows, end-to-end.
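For readers who want something concrete, here is a minimal sketch of that handoff in Python. It is not the client's actual code: the table name, stage name, and S3 key layout are all hypothetical, and the upload itself (for example via boto3) and the Airflow task wiring are left out so the snippet runs with only the standard library. It shows the three small pieces the flow depends on: serializing extracted rows to a flat file, choosing a predictable S3 key, and building the `COPY INTO` statement Snowflake would run against an external stage.

```python
import csv
import io
from datetime import date


def rows_to_csv_bytes(columns, rows):
    """Serialize rows (e.g. fetched from PostgreSQL) to an in-memory CSV flat file."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(columns)   # header row, skipped later by SKIP_HEADER = 1
    writer.writerows(rows)
    return buf.getvalue().encode("utf-8")


def s3_key_for(table, run_date):
    """Build a date-partitioned object key (hypothetical layout)."""
    return f"exports/{table}/dt={run_date.isoformat()}/{table}.csv"


def copy_into_sql(target_table, stage, key):
    """Build a Snowflake COPY INTO statement for one staged file.

    Assumes `stage` is an external stage pointing at the bucket root.
    """
    return (
        f"COPY INTO {target_table} "
        f"FROM @{stage}/{key} "
        "FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1 "
        "FIELD_OPTIONALLY_ENCLOSED_BY = '\"')"
    )


if __name__ == "__main__":
    # Illustrative data only; names and values are made up.
    payload = rows_to_csv_bytes(["id", "name"], [(1, "a, b"), (2, "c")])
    key = s3_key_for("payments", date(2024, 1, 2))
    print(payload.decode("utf-8"))
    print(key)
    print(copy_into_sql("raw.payments", "poc_stage", key))
```

In a setup like ours, an Airflow task would call something like the first two functions and upload the bytes to S3, while the `COPY INTO` statement (or an equivalent pipe) would run on the Snowflake side. Keeping the key layout deterministic makes it easy to reload a single day without touching the rest.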

The project came with real-world context too. We weren’t building in isolation. This was part of a financial services initiative—specifically fintech—so everything we touched had to be scalable, compliant, and secure. The stakes were higher, and the constraints tighter, but that just made the outcome more satisfying. Clean pipelines don’t just look good on paper – they help us move faster, catch issues earlier, and make better decisions as a business.

Now that the foundations are in place, our sights are set on what comes next. There’s plenty more to explore: scaling pipelines, automating more of the process, even experimenting with real-time ingestion and machine learning workflows. But it’s already clear that this small beginning was worth it.

Looking back, it’s easy to forget how simply things started: a small PoC, a few flat files on S3, and a desire to just make things better. But that’s how good engineering stories begin: with a bit of curiosity and a team willing to try something new.